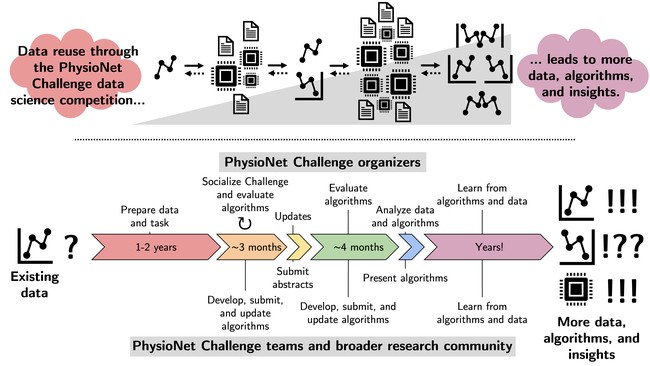

The PhysioNet Challenges are annual data science competitions that reuse and improve data. For over 20 years, the Challenges have curated and used high-quality datasets to facilitate the development, evaluation, and sharing of generalizable algorithms by a diverse community of researchers. For each Challenge, we define a clinical problem, post training data, and evaluate the submitted algorithms on hidden validation and test data. Although the Challenges resemble other data science competitions and individual research workflows, several key differences ensure their scientific contributions. Through data use, data reuse, and algorithm development, the PhysioNet Challenges wring unrealized value from data, even from well-used datasets.

The PhysioNet Challenge team has a senior faculty member, Gari Clifford; a junior faculty member, Matthew Reyna; multiple postdoctoral trainees that Dr Clifford and Dr Reyna supervise; annually rotating expert faculty and clinical collaborators; and invaluable contributions from the larger research community.

Dr Clifford and Dr Reyna invite one or more trainees to join the Challenge team. Each Challenge gives trainees opportunities to work directly with large, real-world clinical databases and with over a thousand diverse codebases. Many trainees who worked on the Challenges, including Dr Clifford and Dr Reyna, have gone on to faculty or industry positions.

Clinical experts typically propose the Challenge and initial data, and Dr Clifford and Dr Reyna invite other faculty and clinical collaborators to help shape the data, tasks, and evaluation metrics that effectively define each Challenge. We also seek collaborators with backgrounds that enrich the representation of the Challenges.

The research community is also an integral part of the PhysioNet Challenge team. The community often identifies issues in the data, including unappreciated issues in existing databases that have been used for years.

The PhysioNet Challenges are part of the PhysioNet Resource, which was established in 1999 by Ary Goldberger and Roger Mark at MIT. PhysioNet’s original and ongoing mission is to conduct and catalyze biomedical research and education by providing free access to large collections of physiological and clinical data and related open-source software.

The PhysioNet Challenges are annual data science competitions that were established in 2000 by George Moody from MIT, who led them from 2000 to 2014. The Challenges have been led by Gari Clifford from Emory BMI since 2015 and co-led by Matthew Reyna from Emory BMI since 2019.

The Challenges invite researchers from both academia and industry to develop algorithmic solutions to important biomedical problems. The Challenges introduce and reinforce standards for open and reproducible data, research, and education in clinical informatics and machine learning, and they have advanced the state of the art in the topics considered by PhysioNet.

Based on our experiences as the organizers of the PhysioNet Challenges, we recommend the following practices for data reuse:

● Find even more data to learn more about your data.

● Pose an interesting and relevant problem for the data to motivate data reuse.

● Check and double check the data.

● Convert the data to open and standard data formats.

● Share the data publicly, accessibly, and freely.

● To increase the impact of the data that you share, do not share all of it. This advice may sound counterintuitive, but private data allow us to accurately assess algorithm generalizability; a public test set is no longer a test set.

● Share code for reading and using the data.

● Develop algorithms that learn from and improve the data.

● Develop meta-algorithms that learn from and improve the algorithms!

● Ensure that the algorithms that use the data are usable, reusable, reproducible, and generalizable.

● Share the algorithms (and meta-algorithms) from the community.

● Encourage, support, and incentivize researchers to present and publish their work on the data.

● Develop a diverse team of researchers.
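The advice to withhold part of the data can be illustrated with a minimal Python sketch. It assumes that each record carries a patient identifier and splits at the patient level, so that no patient contributes records to both the public training set and the hidden test set. The function name `split_by_patient`, the record layout, and the default hidden fraction are hypothetical choices for illustration, not the Challenges' actual procedure.

```python
import random
from typing import Dict, List, Tuple

# Hypothetical record layout: each record must contain a "patient_id" key.
Record = Dict[str, object]


def split_by_patient(
    records: List[Record], test_fraction: float = 0.3, seed: int = 0
) -> Tuple[List[Record], List[Record]]:
    """Split records into a public training set and a hidden test set.

    All records from the same patient land on the same side of the split,
    so the hidden set cannot be learned from near-duplicate public records.
    """
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")

    # Sort before shuffling so the split depends only on the seed, not input order.
    patients = sorted({str(r["patient_id"]) for r in records})
    rng = random.Random(seed)
    rng.shuffle(patients)

    # Withhold at least one patient; the rest are released publicly.
    n_hidden = max(1, round(test_fraction * len(patients)))
    hidden_patients = set(patients[:n_hidden])

    public = [r for r in records if str(r["patient_id"]) not in hidden_patients]
    hidden = [r for r in records if str(r["patient_id"]) in hidden_patients]
    return public, hidden


if __name__ == "__main__":
    # Ten hypothetical patients with three records each.
    records = [{"patient_id": p, "record": f"{p}_{i}"} for p in "ABCDEFGHIJ" for i in range(3)]
    public, hidden = split_by_patient(records, test_fraction=0.3, seed=42)
    print(len(public), len(hidden))
```

With ten patients and `test_fraction=0.3`, three patients and all of their records are withheld. Splitting by patient rather than by record is one common way to keep a test set honest, because records from the same patient are often highly correlated.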

We share these practices along with the Challenge data, algorithms, evaluation metrics, and results through preprints, open-access publications, public talks, GitHub repositories in https://github.com/physionetchallenges, and https://PhysioNetChallenges.org.

The PhysioNet Challenges revolve around data: the data help us to develop algorithms, which in turn help us to better understand, characterize, and often correct the data. Therefore, the replicability of research using the Challenge data and algorithms is essential for their research impact:

● We check the raw data for errors, PHI, and other data issues.

● We perform unit checks on the data for physiological plausibility and consistent encoding.

● However, we intentionally preserve realistic quirks in the data, such as data entry errors, so that the teams can design their code to address them.

● We convert the data into efficient formats, using standard data formats whenever possible.

● We implement and share open-source code to read the data in multiple programming languages; we use existing open-source libraries whenever possible.

● We implement and share open-source minimal working examples in multiple programming languages.

● We apply unit checks to the algorithms and algorithm outputs to ensure predictable and consistent performance.

● We require all algorithms to use consistently formatted inputs and outputs and to solve the same task.

● We require the teams to submit their full, containerized code for reproducibility.

● We run the code to ensure that it actually runs.

● We evaluate the algorithms on hidden data to assess generalizability.

● We incentivize teams to present and publish their work.

● We share the data and algorithms freely with the community.
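The unit checks for physiological plausibility and consistent encoding described above might look something like the following minimal Python sketch. It flags implausible or inconsistently encoded values rather than correcting them, which fits the practice of preserving realistic quirks for the teams to handle. The field names, the limits in `PLAUSIBLE_RANGES`, and the Fahrenheit heuristic are hypothetical assumptions for illustration; appropriate limits depend on the population, the devices, and the Challenge task.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical plausibility limits (inclusive), for illustration only;
# real limits depend on the population, device, and Challenge task.
PLAUSIBLE_RANGES: Dict[str, Tuple[float, float]] = {
    "heart_rate_bpm": (20.0, 300.0),
    "spo2_percent": (50.0, 100.0),
    "temperature_c": (25.0, 45.0),
}


def check_record(record: Dict[str, object]) -> List[str]:
    """Return human-readable issues for one record without modifying it.

    Values are flagged, not corrected, so realistic quirks stay in the data.
    """
    issues: List[str] = []
    for field, (low, high) in PLAUSIBLE_RANGES.items():
        value = record.get(field)
        # Missing values (absent, None, or NaN) are skipped, not flagged.
        if value is None or (isinstance(value, float) and math.isnan(value)):
            continue
        if not isinstance(value, (int, float)):
            issues.append(f"{field}: non-numeric value {value!r}")
        elif not low <= value <= high:
            issues.append(f"{field}: {value} outside [{low}, {high}]")

    # Hypothetical heuristic: a "Celsius" value that is plausible in
    # Fahrenheit suggests a unit mix-up during data entry.
    temp = record.get("temperature_c")
    if isinstance(temp, (int, float)) and 77.0 <= temp <= 113.0:
        issues.append("temperature_c: value looks like Fahrenheit")
    return issues


if __name__ == "__main__":
    print(check_record({"heart_rate_bpm": 72, "spo2_percent": 97, "temperature_c": 98.6}))
```

For example, the record above yields two issues: the temperature falls outside the assumed Celsius range, and it looks like a Fahrenheit value. The organizers can then decide whether to document the quirk, correct it, or deliberately keep it.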

The PhysioNet Challenges would not exist without community engagement and outreach. Time and again, we have found that sharing and reusing data with the broader community as part of the Challenges begets more data, better data, and more and better insights into data. For existing datasets, the Challenges provide a second life that advances the state of the art in the domain of the data. This reinforcing feedback loop between data and algorithms advances the field, but community involvement is essential for achieving it. When data are shared through the Challenges and a public forum, a new generation of researchers focuses on an old topic, injecting novelty and innovation into existing problems. Once these researchers understand the benefits, they are more likely to share data and code themselves, which has been shown to lead to more citations and greater exposure that boosts careers. Dr Clifford is a key example: he landed his postdoctoral position at MIT because of the 2002 Challenge and the code he shared on PhysioNet. The publications describing the Challenges routinely garner hundreds of citations, illustrating how this sharing process reaches a large community.