(please see attached document)

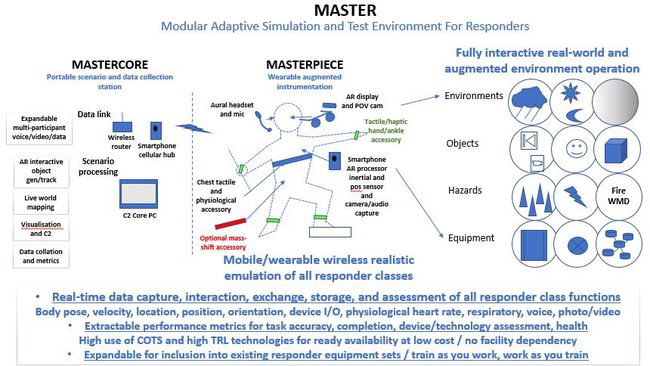

The MASTER system is composed of two (2) primary subsystems: (1) an enabling core subsystem (MASTERCORE) that provides augmented reality simulation and environment generation and serves as the central communications/data exchange and command and control (C2) element; and (2) an individualized sensor and display/control/communications element (MASTERPIECE) worn by each participant.

The MASTERCORE subsystem includes: (a) a laptop hosting the scenario processing and data hub, command status, and storage functions; (b) mapping software for generating 2D and 3D facility overlays; (c) an augmented object overlay generation server and companion client application software; and (d) a communications hub unit configured for wireless interoperation (WiFi router, cellular hot spot) and extendable for integration with existing tactical radio systems.

The MASTERPIECE wearable system includes: (a) a smartphone (similar to the Samsung Galaxy S6 or later) hosting client application software capable of real-time rendering of augmented, scenario-specific objects, with self-contained power, photo and video camera capability, audio communications, data capture/exchange/storage/entry, and a user interface and controls; (b) a dual-eye augmented reality (AR) heads-up display (HUD) worn as glasses (similar to the Vuzix AR3000), integrated with a separate inertial sensor for head tracking/orientation and a point of view (POV) camera; (c) an audio headset with earpiece and microphone (the headset and AR glasses both connect to the smartphone); (d) separately arrayed tactile and haptic feedback units at the hands and feet, wirelessly connected via Bluetooth Low Energy (BLE), each with an integrated power supply and inertial sensor; and (e) a chest-mounted element integrating tactile, inertial, heart rate, and respiration sensors.

Key to the MASTER solution is the use of see-through augmented reality (AR), rendered in real time for each participant, in which objects and other elements are injected as overlays on the existing real-world environment and can be interacted with for added task and execution realism. The AR injects use-case-specific objects as well as audio and visual environmental effects (blurred/fogged vision, sounds of fire, etc.), and enables interaction with virtualized or selected real objects (doors, switches, device controls). The AR can also inject hazards such as fires or shooters and emulate specific victim or event conditions within the participant's visual and aural space. The AR is delivered through compact dual-eye glasses, suitable for wear with facemasks and helmets, that integrate orientation sensors and a point of view (POV) camera, while audio is provided via headset and microphone.

A second key is the use of smartphones as the wearable processor and as the data, device, and communications interface. The smartphone additionally enables core body inertial and location measurements, initial photo and video capture, and capture of select environmental data such as temperature. Existing WiFi and/or cellular infrastructure can be exploited or expanded to create multi-participant communications and exchanges of forms/documents, photographs, audio files, and other media and data. Data interfaces and protocols are standardized for easy exchange, and existing tactical communications systems may be interfaced as well.

Selected accessories and sensors, including uniquely developed tactile/haptic and body pose sensors, augment the self-worn MASTER system. They are self-powered and interconnected, creating an easily donned instrumentation system suitable for collecting and exchanging location, orientation, body position, object tracking, physiological, visual/aural, and other data. Additional accessories such as portable flash rigs, smoke pots, and simulated equipment may be added to the system if desired.

A rapidly deployable command and control (C2) core element, hosted on a portable laptop, includes software to enter a map of the selected real-world area and to develop and serve selected AR-based objects required by the use case (debris, furniture, blockages, victims, tools, controls, etc.) to the participants via client applications hosted on their smartphones. Software based on open source ARToolkit elements and the Unity game engine is used to inject real-time environmental visual and audio effects (fog, rain) into the self-worn MASTER wearable system, to monitor interactivity of AR-enabled objects (including proximity alerts, action state, and presence), and to collect and exchange ongoing data during any operational event.

The C2 element may additionally be extended to exploit existing tactical communications through COTS tactical-radio-to-VoIP or cellular bridge systems, enabling participants to operate fully with their existing C2 equipment.

(please see attached document)

The MASTER system's simple architecture, low-cost wearable implementation based on COTS/high-TRL components, and straightforward design enable highly accurate reproduction of the system.

The MASTER system's heavy use of COTS components and simple, high-TRL accessories, combined with a low-cost wearable architecture, makes the design readily available. The use of augmented reality and wearable instrumentation allows the system to be deployed and used anywhere.

The design of the MASTER system is intentionally modular, compact, and mobile, such that the time from deployment to operation should be under one hour at any site location.

Specifically, the MASTERCORE C2 laptop and communications equipment may be deployed from a single Pelican™ rugged case by one individual.

Each MASTERPIECE wearable system, comprising AR glasses/camera/sensors, smartphone and mount, hand/ankle units, and optional chest/torso sensor/tactile elements, may be donned by a single participant within 15 minutes. The participant's existing equipment and tools remain available for use with the system.

Prior to deployment, a site-specific and use-case-specific scenario is generated from a scanned or manually entered estimate of the location map, captured in a registrable 2D/3D shapefile. Specific objects, victims, virtual participants, interactive options, and decision aids are selected from a pre-developed library or uniquely generated with ARToolkit for use in the Unity server and client applications, which present the correct augmented reality environment and actions.
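As a minimal sketch of registering library objects to a site map, the code below converts the latitude/longitude of each placed object into east/north meters relative to a chosen site origin, using an equirectangular approximation that is adequate over building-scale distances. The `PlacedObject` record, the `place` helper, and the library identifiers are hypothetical illustrations; the MASTER design does not prescribe a coordinate scheme or object schema.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


@dataclass
class PlacedObject:
    """Hypothetical scenario object registered to the site's local frame."""
    library_id: str          # key into a (hypothetical) pre-developed object library
    east_m: float            # meters east of the site origin
    north_m: float           # meters north of the site origin
    interactive: bool = False


def to_local_en(origin_lat: float, origin_lon: float,
                lat: float, lon: float) -> tuple[float, float]:
    """Equirectangular approximation: degrees -> (east, north) meters from the origin."""
    east = EARTH_RADIUS_M * math.radians(lon - origin_lon) * math.cos(math.radians(origin_lat))
    north = EARTH_RADIUS_M * math.radians(lat - origin_lat)
    return east, north


def place(library_id: str, origin: tuple[float, float],
          lat: float, lon: float, interactive: bool = False) -> PlacedObject:
    """Register one library object at a surveyed or map-derived lat/lon."""
    east, north = to_local_en(origin[0], origin[1], lat, lon)
    return PlacedObject(library_id, east, north, interactive)
```

For example, `place("debris_pile", (0.0, 0.0), 0.001, 0.0)` yields an object roughly 111 m north of the origin. Over larger areas, a proper map projection (such as the one recorded in a shapefile's `.prj` file) would be the safer choice.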

Operation of the MASTER system consists of executing the AR scenario, with real-time data exchange, voice, video, and action status passing between the MASTERPIECE-equipped participants and the MASTERCORE C2. Real-time task execution scoring, device performance evaluation, and additional monitoring, modification, and evaluation may be performed either at the MASTERCORE C2 or by an evaluator wearing a separate MASTERPIECE system.

All data stored on the wearable and deployed systems may be collected post-scenario for after action assessment, data pattern collection and analysis, and further performance metric extraction.
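Because each wearable stores its own data, post-scenario collection implies merging several per-device logs into one timeline. A minimal sketch, assuming each log is already sorted by timestamp and that device clocks are synchronized; the record layout is a hypothetical illustration.

```python
import heapq
from typing import Iterable, Iterator, List, Tuple

# Hypothetical record layout: (timestamp_s, device_id, payload)
Record = Tuple[float, str, str]


def merge_device_logs(*logs: Iterable[Record]) -> Iterator[Record]:
    """Lazily merge time-sorted per-device logs into one time-ordered stream.

    heapq.merge consumes the inputs incrementally rather than loading and
    re-sorting everything, which suits large post-scenario downloads.
    """
    return heapq.merge(*logs, key=lambda record: record[0])


def is_time_sorted(log: List[Record]) -> bool:
    """Check the precondition that a log is non-decreasing in time."""
    return all(a[0] <= b[0] for a, b in zip(log, log[1:]))
```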

(please see attached document)

The MASTER's use of augmented environments (visual/audio injections) allows realistic operational execution against hazards and exposure to the elements while keeping participants safe.

The addition of tactile and haptic/thermal accessories adds realism to equipment use and environmental exposure without exposing participants to actual, uncontrollable elements.

The wearable solution also allows existing protective clothing and equipment to be used during test and evaluation. Choosing an instrumented wearable system further improves safety, because existing clothing, tools, and processes are retained without the modifications imposed by the limitations or dependencies of instrumented site/facility solutions.

Extending the captured measurements to include body movement, respiration, and similar data can also aid real-time monitoring of participant performance and health.
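One simple way such real-time health monitoring could work is a rolling-average threshold on a physiological stream such as heart rate. The sketch below is illustrative only: the `RollingAlert` class, the window length, and the 150 bpm threshold are hypothetical placeholders, not clinically validated values or part of the MASTER design.

```python
from collections import deque


class RollingAlert:
    """Flag when the mean of the last `window` samples exceeds `threshold`.

    Waiting for a full window suppresses alerts triggered by single noisy samples.
    """

    def __init__(self, window: int, threshold: float) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        self.samples: deque = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value: float) -> bool:
        """Add one sample; return True only when a full window averages above threshold."""
        self.samples.append(value)
        if len(self.samples) < self.samples.maxlen:
            return False
        return sum(self.samples) / len(self.samples) > self.threshold
```

For instance, `RollingAlert(window=3, threshold=150.0)` fed 140, 150, 160, 170 bpm returns `False, False, False, True`: the third window averages exactly 150, and only the fourth (mean 160) exceeds the threshold.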

(please see attached document)

The primary enabling function of the MASTER system is an augmented reality overlay capability, hosted on a server device, working in conjunction with a game engine that generates the environment. Together they render objects and features such as visual and hazard effects, so that any situation can be presented as overlays within any real-world site or location.

Objects, hazards, and interactive elements are generated using 3D rendering, effects, and AR tools such as the open source ARToolkit, from which elements can be derived such as furniture that blocks progress or can be moved aside, blankets to pick up or cover objects with, movable doors, victims and other personnel, and light and device switches and controls. When joined with an environmental rendering game engine (such as Unity Technologies' Unity) and the previously created area map, the generated objects may be superimposed into each participant's point of view using a separately controllable client software component hosted on each participant's smartphone (similar to the Augment app). This component coordinates and unifies the presented events, actions, and object interactions as if they existed in the real environment on which they are overlaid.

The participant's orientation, body pose, location, and other data, which tell the core element exactly where to place the augmented objects, are provided by the inertial, orientation, and location (GPS) sensors native to the smartphone that serves as the participant's central wearable processor and controller. Additional inertial elements (similar to the InterSense InertiaCube 4) enabling pitch/roll/yaw/velocity/orientation estimates are provided at the head (integrated with the augmented display unit), the chest (integrated with the heart rate and respiratory sensors), and at each hand and foot (integrated with the companion tactile and haptic/thermal effects devices described below).
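Orientation estimates like these are commonly obtained by fusing gyroscope and accelerometer readings. Commercial trackers such as the InertiaCube use their own fusion algorithms; the sketch below instead shows a basic complementary filter for pitch only, as an illustration of the general technique. The axis convention (x forward, z up, accelerometer reading +g on z when level, nose-up pitch positive), the pre-mapped `pitch_rate` input, the function names, and the `alpha` value are all assumptions.

```python
import math


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch (radians, nose-up positive) implied by the measured gravity vector.

    Valid only when the sensor is not otherwise accelerating.
    """
    return math.atan2(ax, math.sqrt(ay * ay + az * az))


def complementary_pitch(prev_pitch: float, pitch_rate: float,
                        ax: float, ay: float, az: float,
                        dt: float, alpha: float = 0.98) -> float:
    """One filter step.

    The integrated gyro rate is smooth but drifts; the accelerometer pitch is
    noisy but drift-free. Weighting them by alpha and (1 - alpha) keeps the
    short-term gyro response while slowly pulling the estimate toward gravity.
    """
    gyro_estimate = prev_pitch + pitch_rate * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(ax, ay, az)
```

With a stationary, level sensor, an initial pitch error decays geometrically by a factor of `alpha` per step, which is the drift correction the filter is designed to provide.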

By combining multiple separate inertial sensors located at key motion anchors of the participant (head, chest/torso, hands, feet/ankles), the MASTERCORE can estimate the participant's full body pose and correlate it with the separately derived location to place augmented objects accurately relative to the participant in real time.
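To decide where, and whether, an overlay appears, the core must express each object's position relative to the participant's head pose. A minimal 2D sketch, assuming a local east/north site frame, a head yaw measured counterclockwise from east, and a hypothetical 30° horizontal display field of view with a 20 m render range (the real field of view depends on the glasses used):

```python
import math


def to_head_frame(px: float, py: float, yaw: float,
                  ox: float, oy: float) -> tuple[float, float]:
    """Rotate the participant-to-object offset into the head frame.

    Returns (forward, left) in meters, with forward along the facing direction.
    """
    dx, dy = ox - px, oy - py
    forward = dx * math.cos(yaw) + dy * math.sin(yaw)
    left = -dx * math.sin(yaw) + dy * math.cos(yaw)
    return forward, left


def in_field_of_view(px: float, py: float, yaw: float, ox: float, oy: float,
                     fov_deg: float = 30.0, max_range_m: float = 20.0) -> bool:
    """True if the object lies within the horizontal FOV and render range."""
    forward, left = to_head_frame(px, py, yaw, ox, oy)
    bearing = math.atan2(left, forward)      # 0 means straight ahead
    distance = math.hypot(forward, left)
    return distance <= max_range_m and abs(bearing) <= math.radians(fov_deg) / 2
```

A full implementation would use the complete 3D head orientation (pitch and roll as well as yaw) from the head-mounted inertial sensor; the 2D version only illustrates the frame change.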

For scenario development, a separate task-focused software element generates both a waypoint-based timeline of events and a series of decision points or aids that keep the scenario flexible during execution. Task completions and guidance may be exchanged between participants and the C2 operator, with modifications made in real time. Data such as body pose, action status, task status, photos, captured audio, video, and entered form data is created both passively and actively by the participant's wearable system and actions, and is exchanged with other participants and the MASTERCORE C2 element using standardized data formats and protocols (MP3, MPEG, XML, select ASCII, HTML).
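A waypoint timeline with decision points can be modeled as a small state machine in which each waypoint names its default successor and any outcome-dependent branches. The sketch below is a hypothetical illustration (`Waypoint`, `ScenarioTimeline`, and the waypoint names are not from the MASTER design); `jump` stands in for a real-time modification by the C2 operator.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Waypoint:
    name: str
    # Decision point: observed outcome (e.g., "victim_found") -> next waypoint name
    branches: Dict[str, str] = field(default_factory=dict)
    default_next: Optional[str] = None   # taken when no branch matches


class ScenarioTimeline:
    def __init__(self, waypoints: List[Waypoint], start: str) -> None:
        self.waypoints = {w.name: w for w in waypoints}
        if start not in self.waypoints:
            raise KeyError(start)
        self.current = start
        self.history = [start]

    def advance(self, outcome: Optional[str] = None) -> Optional[str]:
        """Move to the next waypoint; return its name, or None at the end of the scenario."""
        wp = self.waypoints[self.current]
        nxt = wp.branches.get(outcome, wp.default_next) if outcome else wp.default_next
        if nxt is not None:
            self._enter(nxt)
        return nxt

    def jump(self, name: str) -> None:
        """Operator override: move directly to any defined waypoint."""
        self._enter(name)

    def _enter(self, name: str) -> None:
        if name not in self.waypoints:
            raise KeyError(name)
        self.current = name
        self.history.append(name)
```

Keeping `history` alongside the current state gives the C2 element a record of the path actually taken, which can later be compared against the planned timeline.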

The scenario task summaries, benchmark and schedule planning, task and performance capture, decision aid suggestions, data capture, and the operational display shell are intended to be developed as an HTML5-based web application specific to MASTER system needs. This application incorporates the ARToolkit, Unity, and related smartphone/communication software development kits (SDKs) with scalable HTML/JavaScript, creating a simple and extendable application solution.

(please see attached document)

The MASTER's use of augmented interactive object overlays and selectively worn tactile/haptic units enables full environmental simulation while allowing actual equipment, clothing, and tools to be used within any real-world location.

The use of augmented interactive object overlays and wearable mobile systems allows multiple responder equipment configurations to be used at actual real-world locations, including mobile platforms and selected physical tools and structures.

The core of the capability is a current smartphone (such as Samsung's Galaxy S6 or S8), which provides multiple wireless and wired interfaces for connecting the additional wearable equipment. The phone is also used for data acquisition, storage, and exchange via the WiFi and/or cellular capabilities selected by the participants for inter-participant and participant-to-C2 exchange. In addition, the phone provides GPS and WiFi/cellular triangulation location capability, enabling the participant to be located autonomously.
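With each phone reporting its own GPS fix, distances between participants (or between a participant and a georegistered object) follow from the standard haversine formula. A minimal sketch assuming a spherical Earth with a mean radius of 6,371 km; `pairs_within` is a hypothetical proximity helper, and indoor GPS fixes may be considerably less accurate than the formula's precision suggests.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float,
                radius_m: float = 6_371_000.0) -> float:
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * radius_m * math.asin(math.sqrt(min(1.0, a)))  # clamp rounding error


def pairs_within(positions: Dict[str, Tuple[float, float]],
                 radius_m: float) -> List[Tuple[str, str]]:
    """Participant ID pairs closer than radius_m (hypothetical proximity check)."""
    return [(a, b) for a, b in combinations(sorted(positions), 2)
            if haversine_m(*positions[a], *positions[b]) < radius_m]
```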

Visual data is derived from the ARToolkit-generated client application and the ARToolkit/Unity environmental software served from the MASTERCORE C2 element, and is shown on the AR glasses. The AR glasses display can use waveguide optics to present augmented overlay data and information within the participant's field of view along a see-through optical path (the real world is not blocked from the eye), enabling a true fusion of simulated objects/hazards/actions with existing facility/area elements.

Audio specific to the scenario environment (additional voices, sounds of objects and weather, etc.) is generated and passed from MASTERCORE to MASTERPIECE, where it is injected into the audio stream and mixed with inter-participant/C2 audio for presentation on the headset. Environmental audio is captured by the headset and smartphone microphones for collection. Sensor data (inertial, body-worn physiological, step/velocity interpolations, body pose/orientation/pointing/location, task actions/completions, device evaluation data, etc.) is stored on the MASTERPIECE smartphone and can be relayed between participants and downloaded for MASTERCORE storage and analysis.
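Since sensor data is relayed between devices in standardized formats such as XML, one possible encoding of a single time-stamped sample is sketched below using Python's standard `xml.etree.ElementTree`. The element and attribute names (`sample`, `reading`, `participant`, `t`) form a hypothetical schema, not one defined by MASTER, and the timestamp is rounded to milliseconds.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Tuple


def sensor_record_to_xml(participant_id: str, timestamp_s: float,
                         readings: Dict[str, float]) -> str:
    """Encode one sample as <sample participant=.. t=..><reading name=..>value</reading>..</sample>."""
    root = ET.Element("sample", participant=participant_id, t=f"{timestamp_s:.3f}")
    for name, value in sorted(readings.items()):   # sorted for stable output
        ET.SubElement(root, "reading", name=name).text = str(value)
    return ET.tostring(root, encoding="unicode")


def xml_to_sensor_record(text: str) -> Tuple[str, float, Dict[str, float]]:
    """Inverse of sensor_record_to_xml."""
    root = ET.fromstring(text)
    readings = {r.get("name"): float(r.text) for r in root.findall("reading")}
    return root.get("participant"), float(root.get("t")), readings
```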

Unique to MASTER is the proposal of separately worn tactile/haptic elements at the hands and ankles to add realism. Each unit is composed of an inertial sensor, a tactile element (to create patterned vibrations), a thermal generating element, and a separate power source. The components are assembled onto a linear Velcro strap and worn at the hands and ankles, supporting body pose measurement for object orientation and providing vibratory and thermal feedback. During scenario operation, the participant can receive hazard area alerts (danger: vibration), equipment feedback (motor operation: vibration), or other hazard cues (increasing heat from the thermal element when close to a hot fire or doorknob), triggered by pre-set hazard ranges or by operator control. The chest sensor element includes physiological sensors and (as future growth) environmental sensors (temperature), as well as separate inertial and tactile elements similar to those of the hand and ankle units.
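The pre-set hazard ranges described above can be read as a mapping from a unit's distance to each hazard into a vibration or thermal drive level. The sketch below assumes a linear ramp inside a hazard's alert radius, a hypothetical safety cap on thermal output, and an operator override; `HazardZone`, `feedback_level`, `unit_commands`, and `MAX_THERMAL_DUTY` are illustrative names, not part of the MASTER design.

```python
import math
from dataclasses import dataclass
from typing import Dict, Iterable, Optional

MAX_THERMAL_DUTY = 0.6   # hypothetical safety cap on heater duty cycle (0..1)


@dataclass
class HazardZone:
    kind: str              # "vibration" or "thermal"
    x: float               # hazard position in the site frame (meters)
    y: float
    alert_radius_m: float


def feedback_level(zone: HazardZone, ux: float, uy: float) -> float:
    """0.0 outside the alert radius, rising linearly to 1.0 at the hazard itself."""
    d = math.hypot(ux - zone.x, uy - zone.y)
    if d >= zone.alert_radius_m:
        return 0.0
    return 1.0 - d / zone.alert_radius_m


def unit_commands(zones: Iterable[HazardZone], ux: float, uy: float,
                  override: Optional[Dict[str, float]] = None) -> Dict[str, float]:
    """Drive levels for one hand/ankle unit; an operator override takes precedence.

    The thermal level is always scaled by the safety cap, even when overridden.
    """
    if override is not None:
        levels = dict(override)
    else:
        levels = {"vibration": 0.0, "thermal": 0.0}
        for zone in zones:
            levels[zone.kind] = max(levels.get(zone.kind, 0.0), feedback_level(zone, ux, uy))
    levels["thermal"] = min(levels.get("thermal", 0.0), 1.0) * MAX_THERMAL_DUTY
    return levels
```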

An additional novel implementation uses a metal mass that shifts within a smooth enclosed compartment, driven by two (2) electromagnets at opposite sides of the lower back, either as an extension of the chest element or as a separately worn accessory across the lower back. When programmed, the electromagnets operate in sequence to cycle the mass from side to side, shifting the wearer's center of mass and thus producing the direct sensation of a pitching floor or a vessel on water.
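A programmed side-to-side cycle for the two electromagnets could be expressed as an alternating pulse schedule. The sketch below is hypothetical: the period, the pulse fraction, the `magnet_schedule` name, and the left/right labels are placeholders, and the only property it enforces is that the two magnets are never energized at the same time.

```python
from typing import List, Tuple

# (start_time_s, magnet, duration_s)
Pulse = Tuple[float, str, float]


def magnet_schedule(period_s: float, cycles: int,
                    pulse_fraction: float = 0.4) -> List[Pulse]:
    """Alternate left/right pulses; each magnet fires once per period.

    Each pulse lasts pulse_fraction of a half-period, so with 0 < pulse_fraction < 1
    every pulse ends before the opposite magnet's pulse starts.
    """
    if period_s <= 0 or cycles < 0:
        raise ValueError("period_s must be positive and cycles non-negative")
    if not 0.0 < pulse_fraction < 1.0:
        raise ValueError("pulse_fraction must be strictly between 0 and 1")
    half = period_s / 2
    pulses: List[Pulse] = []
    for c in range(cycles):
        t0 = c * period_s
        pulses.append((t0, "left", half * pulse_fraction))
        pulses.append((t0 + half, "right", half * pulse_fraction))
    return pulses
```

A slower period would suggest a gently rocking deck, a faster one a more abrupt pitching floor; suitable values would have to be found through testing.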

Communications, including voice and data (form entries, discrete measurements, photographs, video, and environmental sound data), are provided by the built-in WiFi and cellular capabilities of a companion smartphone similar to those worn by the individual participants. For WiFi operation, the smartphone can create a hot spot sufficient to establish wireless communications. For cellular operation, existing 3G/4G service can be leveraged directly through the smartphones. For areas without cellular coverage, COTS cellular hub elements (such as those by Qualcomm) may be deployed to create a localized cellular network, with companion wireless routers used to extend coverage throughout the area as needed.

MASTER additionally enables interfacing with existing tactical communications equipment, a need common across the responder community, via bridging systems widely used by responders (such as those provided by Trident or Codan/Daniels) that repeat and interface voice and data from HF/VHF/other communications systems into TCP/IP-based VoIP and networked operations.

(please see attached document)

The MASTER system's enhanced body pose inertial recording and uniform wearable solution enable highly accurate and repeatable recording of actions by multiple participants. MASTER uses common data and interface protocols (WiFi, 3G/4G cellular, BLE, HTML5, AR shapefiles, JavaScript, XML/select ASCII) to enable rapid device integration and intra- and inter-system interoperability among the wearable, deployed core, and external systems.

By instrumenting the user (rather than a fixed site) and interactively injecting augmented objects, hazards, and environmental effects as overlays on any real-world environment, the MASTER system may be used virtually anywhere, making any facility (existing or otherwise) a valid test and evaluation or training site, fully instrumented to evaluate task execution, technologies, and performance in a safe and repeatable manner.

By making visual/aural and tactile/haptic environmental effects modifiable in software, any weather or hazard condition can be safely emulated regardless of location or time of day.

By using force-feedback and thermal elements that do not conflict with user equipment, the MASTER accessories provide realistic impact, heat, and other feedback while participants use their actual equipment and follow actual processes, methods, and actions.

The MASTER may be customized for any responder user group or use case through software-based selection of objects, use case scenarios, tasks and task order, and other tailoring, while maintaining standardized metrics and data capture through the MASTER core system and its accessories.

Because facility layouts can be entered manually or from scanned blueprints, any site location may be easily entered and registered within the MASTER system for coordination and use.

By ensuring standardized data interfacing and the use of commonly available protocols and formats such as TCP/IP, MP3, MPEG, Bluetooth/Bluetooth Low Energy, WiFi, 4G cellular, shapefiles, XML, and VR exchange formats, additional technologies and equipment (as well as existing test facilities, other simulation equipment, and stand-alone trainers) can be easily integrated in the future, with data exchanged among all MASTER participants.

The MASTER system’s wearable-based portability and real-world deployment enable anywhere/anytime/any-scenario use with actual equipment and without dependencies on or limits from site/facility constraints. The MASTER system’s use of augmented reality interactive object overlays and centrally coordinated task and data collection enables virtually unlimited scenario creation and a wide array of capturable measurements.

Physical equipment handling: Due to the MASTER’s use of wearable instrumentation and virtualized/augmented interactions, existing user equipment may be used to increase test/training realism.

Interfaces and data exchange: The use of common data protocols and interfaces allows any device to be integrated with the MASTER core elements for specific testing and future upgrades.

The MASTER system’s use of common data interfaces and protocols and scalable software architecture elements provides significant “future proofing”, enabling new solutions to be added and functions to be modified going forward. Select areas identified for near-term integration and enhancement likely include: (a) additional indoor-focused positioning aids such as beaconing to improve localization accuracy, (b) more tactile/haptic units in more locations on the wearer’s body to expand realism, (c) additional voice and gesture control elements to make control and use of the MASTER system simpler and more realistic, and (d) further equipment integration to reduce the number of MASTER system elements and simplify deployment and use.

The primary follow-on for MASTER is the potential for the system to become fully integrated into the daily operations of first responders, enhancing current processes and methodologies at low cost.

(please see attached document)

The estimate of cost for a minimally deployable system (2 wearable units plus support C2 system) is $14,440.00.

The majority of the MASTER system can be purchased as current COTS items. The proposed tactile/haptic units are simply constructed high-TRL/COTS hybrids. The development software currently exists; the specific AR object library and the injection/scenario track shell remain to be developed.

The specific per-system components and estimates for a minimally demonstrable MASTER system (2 participant users and C2 core) are as follows (please see attached document):

AR glasses (1 per participant)

Smartphones (1 per participant)

Headsets (1 per participant)

Tactile/haptic wearable units (4 per participant)

Chest tactile/physiological unit (1 per participant)

PC core (1)

AR development server/client application software

Mapping software

Scenario HTML5 shell software

(Software is defined in the attached document.)

The above includes custom work to develop the tactile/haptic hand and leg units, including a small thermal element, tactile element, and inertial and power elements. Software estimates reflect known pricing or open source availability and do not include development time.

The above does not include potential work on the proposed shifting-mass unit, if desired, to emulate sliding-floor or rocking-boat scenarios.

(please see attached document)

A complete user class/use case capability and desired action evaluation was performed for this effort to validate that all responder class capabilities can be successfully performed with the MASTER system. The actions define the required scope of augmented objects, actions, interactions, and data sets to be included within the MASTER system, and cover six (6) user classes.


Lastly, the MASTER may be scaled for numerous users through the expansion of the proposed wireless/cellular LAN element and C2 elements using emplaceable wireless routers and/or cellular hubs.

(please see attached document)

A complete capability/action and functional assessment of the desired NIST metrics was performed to validate that all responder functionalities can be successfully performed with the MASTER system. The primary user classes include seventeen (17) targeted use cases, which decompose into forty (40) specific functional areas to measure. The measurement base defines the sensors used and the metrics extractable for MASTER analysis and performance evaluation.

The MASTER system measurement base includes sensor measurements and action state elements marked with time, georegistered location, orientation, and body pose, which are sufficient to derive all classes and metrics of performance stated by NIST for this effort.

In short, the desired dimensions of time, accuracy, efficiency, and action needed to relate to the identified measurements and performance metrics can be successfully derived from the MASTER-defined sensor suite of self-contained inertial sensors, position sensors, physiological heart rate/respiration sensors, photo/video/audio capture, and augmented and performed action state/status data. Additional measurement data from selected devices/instruments can also be captured via the MASTER subsystems.

Capturing time-synchronized, real-time inertial, orientation, physiological, position/geolocation, body pose, voice, photo/video, and augmented interactive action completion data enables the MASTER system to extract significant performance metrics, including time to task completion, estimates of performance accuracy, assessments of physical state/exhaustion, and successful administration of medical or other specific process and efficiency functions.
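As an example of extracting one such metric, time to task completion can be computed from time-stamped action state events. A minimal sketch, assuming a hypothetical event layout of (timestamp, participant, task, state) with `started` and `completed` states; the function name and layout are illustrative only.

```python
from typing import Dict, Iterable, Tuple

# Hypothetical event layout: (timestamp_s, participant_id, task_id, state)
Event = Tuple[float, str, str, str]


def task_durations(events: Iterable[Event]) -> Dict[Tuple[str, str], float]:
    """Seconds from 'started' to 'completed' per (participant, task).

    Events are processed in time order; tasks never completed are omitted.
    """
    starts: Dict[Tuple[str, str], float] = {}
    durations: Dict[Tuple[str, str], float] = {}
    for t, participant, task, state in sorted(events):
        key = (participant, task)
        if state == "started":
            starts[key] = t
        elif state == "completed" and key in starts:
            durations[key] = t - starts.pop(key)
    return durations
```

Sorting first means events can arrive from different devices in any order, provided their clocks are synchronized.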

(please see attached document for by-function breakdown):